Section: New Results

Computer-Assisted Design with Heterogeneous Representations

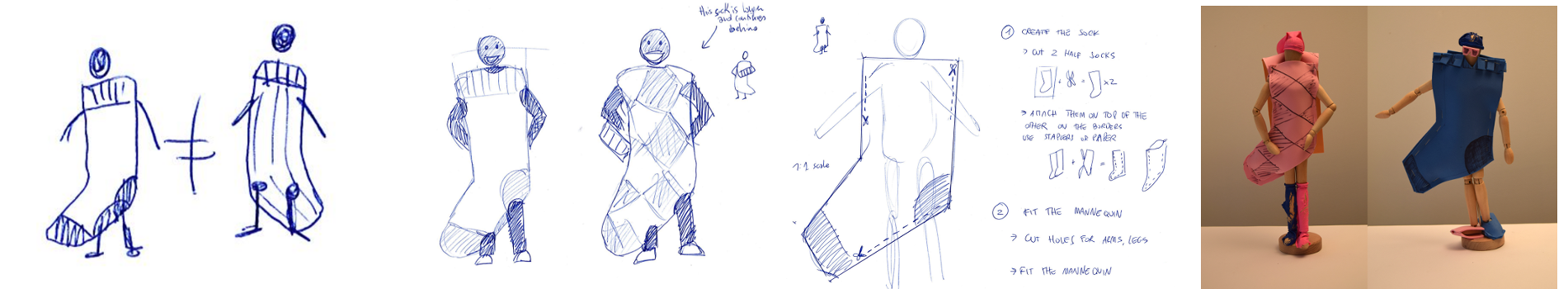

How Novices Sketch and Prototype Hand-Fabricated Objects

Participant : Adrien Bousseau.

We are interested in creating digital tools that support informal sketching and prototyping of physical objects by novices. Achieving this goal first requires a deeper understanding of how non-professional designers generate, explore, and communicate design ideas with traditional tools, i.e., sketches on paper and hands-on prototyping materials. We conducted a study framed around two all-day design charrettes in which participants performed a complete design process: ideation sketching, concept development and presentation, fabrication-planning documentation, and collaborative fabrication of hand-crafted prototypes (Figure 4). This structure allows us to control key aspects of the design process while collecting rich data about creative tasks, including sketches on paper, physical models, and videos of collaborative discussions. Participants used a variety of drawing techniques to convey 3D concepts. They also extensively manipulated physical materials, such as paper, foam, and cardboard, both to explore concepts and to communicate with their design partners. Based on these observations, we propose design guidelines for CAD tools targeted at novice crafters.

This work is a collaboration with Theophanis Tsandilas, Lora Oehlberg and Wendy Mackay from the ExSitu group, Inria Saclay. It was published at the ACM Conference on Human Factors in Computing Systems (CHI) 2016 [9].


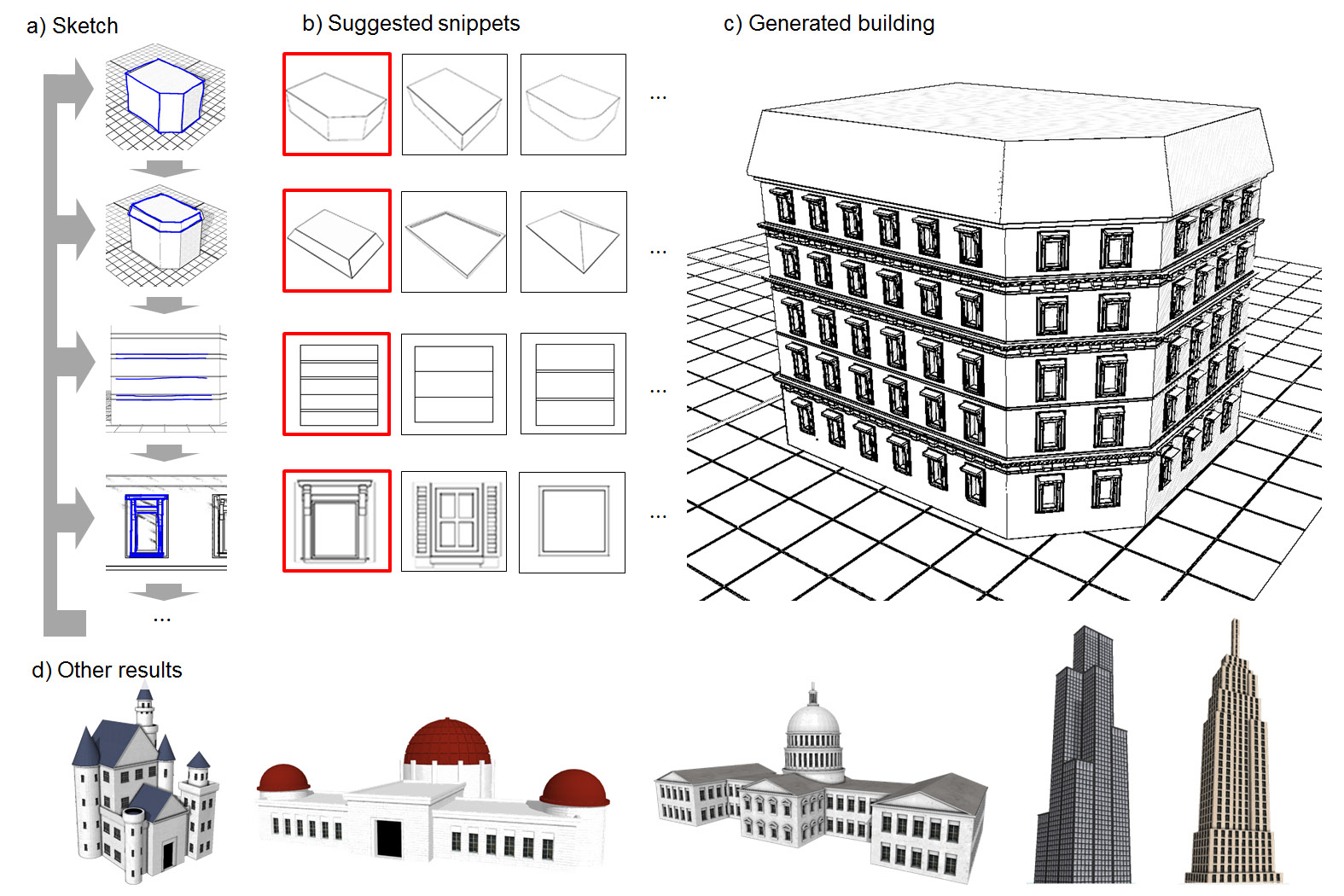

Interactive Sketching of Urban Procedural Models

Participant : Adrien Bousseau.

3D modeling remains a notoriously difficult task for novices despite significant research effort to provide intuitive and automated systems. We tackle this problem by combining the strengths of two popular domains: sketch-based modeling and procedural modeling. On the one hand, sketch-based modeling exploits our ability to draw but requires detailed, unambiguous drawings to achieve complex models. On the other hand, procedural modeling automates the creation of precise and detailed geometry but requires the tedious definition and parameterization of procedural models. Our system uses a collection of simple procedural grammars, called snippets, as building blocks to turn sketches into realistic 3D models. We use a machine learning approach to solve the inverse problem of finding the procedural model that best explains a user sketch. We use non-photorealistic rendering to generate artificial data for training convolutional neural networks capable of quickly recognizing the procedural rule intended by a sketch and estimating its parameters. We integrate our algorithm into a coarse-to-fine urban modeling system that allows users to create rich buildings by successively sketching the building mass, roof, facades, windows, and ornaments (Figure 5). A user study shows that, with our approach, non-expert users can generate complex buildings in just a few minutes.
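The inverse-procedural idea can be illustrated with a toy version under strong simplifying assumptions: two hypothetical snippets (`box` and `gable`), a 16×16 binary canvas, and a nearest-neighbour lookup standing in for the convolutional networks. Rendering every snippet over a parameter grid plays the role of the synthetic training data, and recognition returns both the snippet and its parameters. This is a sketch of the data flow only, not the system's actual grammars, renderer or networks.

```python
from itertools import product

GRID = 16  # hypothetical canvas size (pixels per side)


def draw_line(img, x0, y0, x1, y1):
    """Rasterize a straight segment by uniform sampling (simple DDA)."""
    n = max(abs(x1 - x0), abs(y1 - y0), 1)
    for i in range(n + 1):
        x = round(x0 + (x1 - x0) * i / n)
        y = round(y0 + (y1 - y0) * i / n)
        img[y][x] = 1


def render(snippet, params):
    """Render a hypothetical snippet as a binary line drawing.

    'box'   : params (w, h)    -> building mass seen from the front
    'gable' : params (w, h, r) -> same mass topped by a gable roof of height r
    """
    img = [[0] * GRID for _ in range(GRID)]
    w, h = params[0], params[1]
    x0 = (GRID - w) // 2
    x1 = x0 + w
    ground, top = GRID - 1, GRID - 1 - h
    draw_line(img, x0, ground, x1, ground)
    draw_line(img, x0, top, x1, top)
    draw_line(img, x0, ground, x0, top)
    draw_line(img, x1, ground, x1, top)
    if snippet == "gable":
        peak = ((x0 + x1) // 2, top - params[2])
        draw_line(img, x0, top, *peak)
        draw_line(img, x1, top, *peak)
    return img


def synthetic_dataset():
    """Render every snippet over a grid of parameters (the artificial training data)."""
    data = []
    for w, h in product(range(4, 13, 2), range(4, 9)):
        data.append((render("box", (w, h)), ("box", (w, h))))
        for r in range(2, 5):
            data.append((render("gable", (w, h, r)), ("gable", (w, h, r))))
    return data


def hamming(a, b):
    """Number of pixels on which two binary images differ."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def recognize(sketch, data):
    """Nearest-neighbour stand-in for the CNNs: (snippet, params) of the closest rendering."""
    return min(data, key=lambda item: hamming(sketch, item[0]))[1]


if __name__ == "__main__":
    print(recognize(render("gable", (8, 6, 3)), synthetic_dataset()))  # → ('gable', (8, 6, 3))
```

A nearest-neighbour lookup must scan the whole dataset and degrades quickly as parameters multiply; a trained network amortizes that search, which is one reason a learned regressor is attractive for interactive use.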

This work is a collaboration with Gen Nishida, Ignacio Garcia-Dorado, Daniel G. Aliaga and Bedrich Benes from Purdue University. It was published in ACM Transactions on Graphics (Proc. SIGGRAPH) 2016 [8].


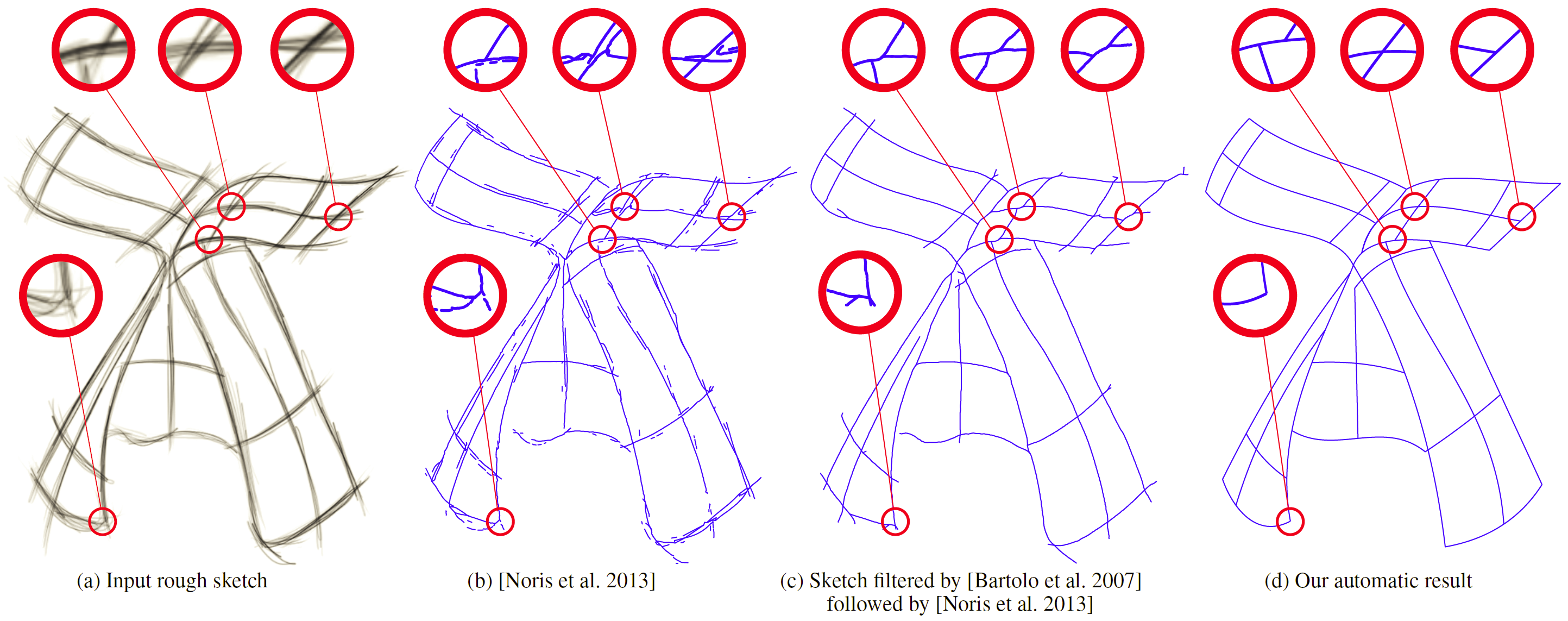

Fidelity vs. Simplicity: a Global Approach to Line Drawing Vectorization

Participant : Adrien Bousseau.

Vector drawing is a popular representation in graphic design because of the precision, compactness and editability offered by parametric curves. However, prior work on line drawing vectorization focused solely on faithfully capturing input bitmaps, and largely overlooked the problem of producing a compact and editable curve network. As a result, existing algorithms tend to produce overly complex drawings composed of many short curves and control points, especially in the presence of thick or sketchy lines that yield spurious curves at junctions. We propose the first vectorization algorithm that explicitly balances fidelity to the input bitmap with simplicity of the output, as measured by the number of curves and their degree. By casting this trade-off as a global optimization, our algorithm generates few yet accurate curves, and also disambiguates curve topology at junctions by favoring the simplest interpretations overall. We demonstrate the robustness of our algorithm on a variety of drawings, including sketchy cartoons and rough design sketches (Figure 6).
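The fidelity/simplicity trade-off can be seen on a much smaller problem. The sketch below assumes a single ordered stroke of sample points and only straight segments (degree 1), and minimizes `fitting error + lam × number of segments` exactly by dynamic programming. The paper's optimization also handles curve degree and junction topology, which this example does not attempt; `lam` and the function names are illustrative.

```python
import math


def segment_error(points, i, j):
    """Sum of squared distances from points[i+1:j] to the line through points[i], points[j]."""
    (ax, ay), (bx, by) = points[i], points[j]
    dx, dy = bx - ax, by - ay
    length2 = dx * dx + dy * dy
    err = 0.0
    for px, py in points[i + 1:j]:
        if length2 == 0:
            err += (px - ax) ** 2 + (py - ay) ** 2
        else:
            cross = dx * (py - ay) - dy * (px - ax)
            err += cross * cross / length2
    return err


def simplify(points, lam):
    """Globally minimize sum(fitting error) + lam * (#segments) over all polylines
    whose vertices are a subset of `points` containing both endpoints."""
    n = len(points)
    best = [0.0] + [math.inf] * (n - 1)  # best[j]: optimal energy of the prefix ending at j
    prev = [-1] * n
    for j in range(1, n):
        for i in range(j):
            cost = best[i] + lam + segment_error(points, i, j)
            if cost < best[j]:
                best[j], prev[j] = cost, i
    path = [n - 1]  # backtrack the chosen vertices
    while path[-1] != 0:
        path.append(prev[path[-1]])
    return path[::-1], best[-1]


stroke = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2), (3, 3)]
print(simplify(stroke, 1.0))    # → ([0, 3, 6], 2.0)
print(simplify(stroke, 100.0))  # → ([0, 6], 109.5)
```

With a small `lam` the corner survives as two exact segments; with a large `lam` a single inaccurate segment becomes cheaper, which is the same tension the global vectorization objective arbitrates.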

The first author of this work, Jean-Dominique Favreau, is co-advised by Adrien Bousseau and Florent Lafarge (Titane team). The work was published in ACM Transactions on Graphics (Proc. SIGGRAPH) 2016 [5].


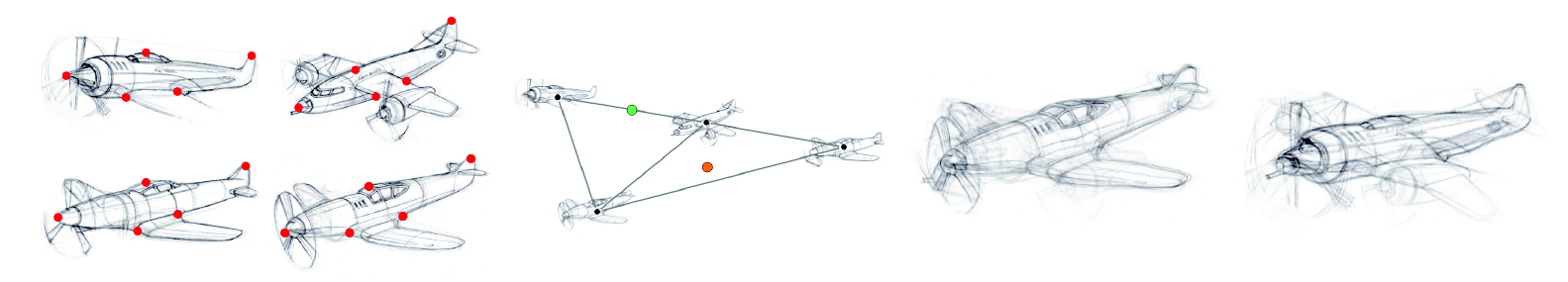

SketchSoup: Exploratory Ideation using Design Sketches

Participant : Adrien Bousseau.

A hallmark of early-stage design is a large number of quick-and-dirty sketches capturing design inspirations, model variations, and alternate viewpoints of a visual concept. We present SketchSoup, a workflow that allows designers to explore the design space induced by such sketches. We take an unstructured collection of drawings as input, register them using a multi-image matching algorithm, and present them as a 2D interpolation space (Figure 7). By morphing sketches in this space, our approach produces plausible visualizations of shape and viewpoint variations despite the presence of sketch distortions that would prevent standard camera calibration and 3D reconstruction. In addition, our interpolated sketches can serve as inspiration for further drawings, which feed back into the design space as additional image inputs. SketchSoup thus fills a significant gap in the early ideation stage of conceptual design by allowing designers to make better-informed choices before proceeding to more expensive 3D modeling and prototyping. From a technical standpoint, we describe an end-to-end system that judiciously combines and adapts various image processing techniques to the drawing domain, where images are dominated not by color, shading and texture, but by sketchy stroke contours.
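Once sketches are registered, a point in the 2D interpolation space can be turned into a morph by blending matched point positions. The sketch below assumes that correspondences between three sketches are already known (SketchSoup obtains them by multi-image matching, not shown) and that the three sketches sit at the corners of a triangle in the design space; it uses plain barycentric blending, which is an illustrative choice rather than the system's actual morphing scheme.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates of 2D point p with respect to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def morph(query, anchors, sketches):
    """Blend three registered sketches placed at `anchors` in the design space.

    sketches[k][m] is the (x, y) position of correspondence m in sketch k
    (correspondences are assumed to be given).
    """
    weights = barycentric(query, *anchors)
    return [
        tuple(sum(w * s[m][d] for w, s in zip(weights, sketches)) for d in range(2))
        for m in range(len(sketches[0]))
    ]
```

Querying exactly at an anchor reproduces that sketch, and moving the query continuously yields a continuous deformation; queries outside the triangle extrapolate and would need clamping in practice.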

This work is a collaboration with Rahul Arora and Karan Singh from the University of Toronto and Vinay P. Namboodiri from IIT Kanpur. The project was initiated while Rahul Arora was an intern in our group. It will be published in Computer Graphics Forum in 2017.


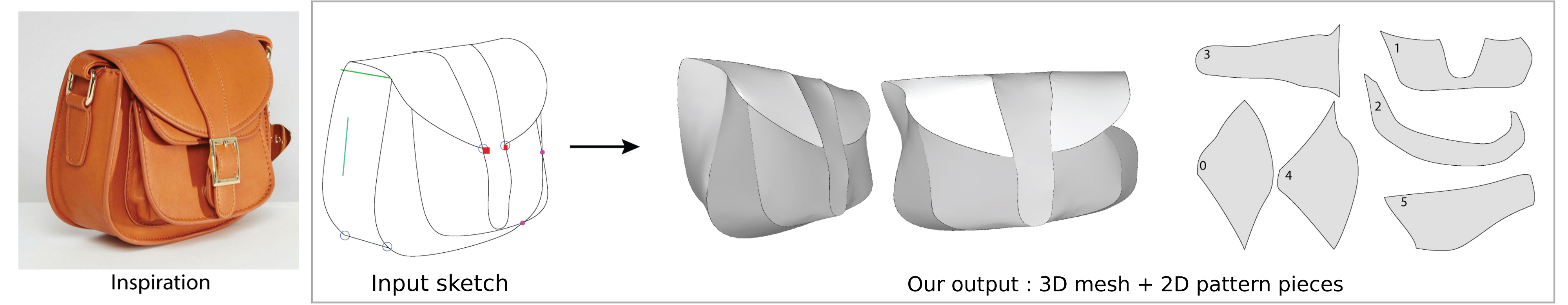

Modeling Symmetric Developable Surfaces from a Single Photo

Participants : Amelie Fondevilla, Adrien Bousseau.

We propose to reconstruct 3D developable surfaces from a single 2D drawing traced and annotated over a side-view photo of a partially symmetrical object (Figure 8). Our reconstruction algorithm combines symmetry and orthogonality shape cues within a unified optimization framework that solves for the 3D positions of the Bézier control points of the drawn curves while being tolerant to drawing inaccuracy and perspective distortions. We then rely on existing surface optimization methods to produce a developable surface that interpolates our 3D curves. Our method is particularly well suited for the modeling and fabrication of fashion items, as it converts the input drawing into flattened developable patterns ready for sewing.
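Solving directly for Bézier control points is convenient because Bézier curves are affine invariant: mirroring the control points mirrors the whole curve. The sketch below assumes a known symmetry plane and shows one possible symmetry term (squared distance between mirrored left control points and their right counterparts) that an optimizer could minimize alongside orthogonality and drawing-fidelity terms; those other terms, and the function names, are hypothetical and not taken from the method.

```python
def de_casteljau(ctrl, t):
    """Evaluate a Bézier curve with 3D control points `ctrl` at parameter t."""
    pts = [tuple(p) for p in ctrl]
    while len(pts) > 1:
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q)) for p, q in zip(pts, pts[1:])]
    return pts[0]


def reflect(p, normal, offset):
    """Mirror point p across the plane {x : normal . x = offset}; `normal` must be unit length."""
    s = 2.0 * (sum(n * x for n, x in zip(normal, p)) - offset)
    return tuple(x - s * n for x, n in zip(p, normal))


def symmetry_energy(ctrl_left, ctrl_right, normal, offset):
    """Sum of squared distances between mirrored left control points and right control points."""
    return sum(
        sum((a - b) ** 2 for a, b in zip(reflect(p, normal, offset), q))
        for p, q in zip(ctrl_left, ctrl_right)
    )
```

When this energy is zero, every point of the right curve is the mirror image of the corresponding point of the left curve, so a symmetry constraint on a handful of control points is enough to make entire curves symmetric.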

This work is a collaboration with Damien Rohmer, Stefanie Hahmann and Marie-Paule Cani from the Imagine team (LJK / Inria Grenoble Rhône-Alpes). This work was presented at the French AFIG conference in November 2016, where it received the 3rd prize for best student work.


DeepSketch: Sketch-Based Modeling using Deep Volumetric Prediction

Participants : Johanna Delanoy, Adrien Bousseau.

Drawing is the most direct way for people to express their visual thoughts. However, while humans are extremely good at perceiving 3D objects from line drawings, this task remains very challenging for computers, as many 3D shapes can yield the same drawing. Existing sketch-based 3D modeling systems rely on heuristics to reconstruct simple shapes, require extensive user interaction, or exploit specific drawing techniques and shape priors. Our goal is to lift these restrictions and offer a minimal interface to quickly model general 3D shapes with contour drawings. While our approach can produce approximate 3D shapes from a single drawing, it achieves its full potential once integrated into an interactive modeling system, which allows users to visualize the shape and refine it by drawing from several viewpoints. At the core of our approach is a deep convolutional neural network (CNN) that processes a line drawing to predict occupancy in a voxel grid. The use of deep learning results in a flexible and robust 3D reconstruction engine that allows us to treat sketchy bitmap drawings without requiring complex, hand-crafted optimizations. While similar architectures have been proposed in the computer vision community, our originality is to extend this architecture to a multiview context by training an updater network that iteratively refines the prediction as novel drawings are provided.
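The multiview data flow (predict a voxel occupancy grid from a first drawing, then refine it with each new viewpoint) can be sketched without any learning. Here both networks are replaced by a classical stand-in, silhouette extrusion and carving (a visual-hull style rule), purely to show how a grid is produced and then updated; the resolution `N`, the axis conventions and the function names are assumptions for illustration.

```python
N = 4  # hypothetical voxel resolution; grids are indexed grid[z][y][x]


def predict_from_front(front):
    """Stand-in for the single-view CNN: extrude the front silhouette front[y][x] along z."""
    return [[[float(front[y][x]) for x in range(N)] for y in range(N)] for _ in range(N)]


def update_from_side(grid, side):
    """Stand-in for the updater network: scale each voxel by the side silhouette side[y][z]
    (the side view looks along x), emptying voxels that fall outside the new drawing."""
    return [[[grid[z][y][x] * side[y][z] for x in range(N)] for y in range(N)] for z in range(N)]


def occupied(grid, threshold=0.5):
    """Count voxels whose occupancy exceeds the threshold."""
    return sum(v > threshold for plane in grid for row in plane for v in row)


front = [[1] * N for _ in range(N)]  # a full square seen from the front
side = [[1 if z <= y else 0 for z in range(N)] for y in range(N)]  # a triangle seen from the side
grid = predict_from_front(front)
grid = update_from_side(grid, side)
```

Unlike a learned updater, this carving rule can only remove voxels and cannot infer concavities or hidden parts that no silhouette reveals, which is precisely what the trained networks are meant to handle.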

This work is a collaboration with Mathieu Aubry from Ecole des Ponts ParisTech and Alexei Efros and Philip Isola from UC Berkeley. It is supported by the CRISP Inria associate team.